Beware of the AI black-market industry chain mass-producing "sexual rumors"

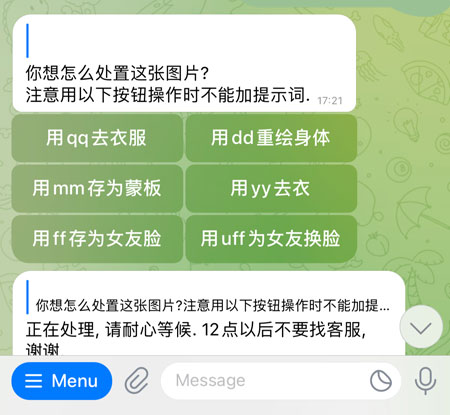

The photo-editing bot offers many functions. Photo courtesy of interviewees

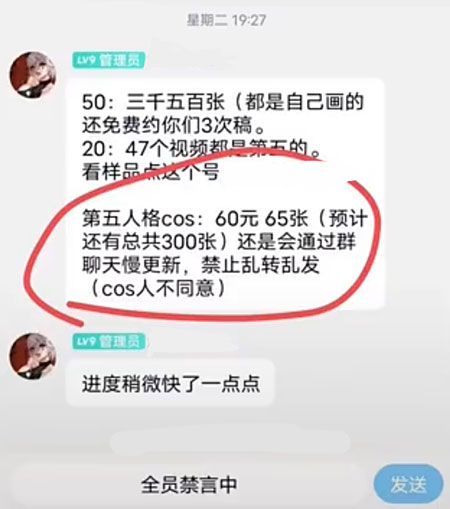

Criminals peddle AI-generated image sets online. Photo courtesy of interviewees

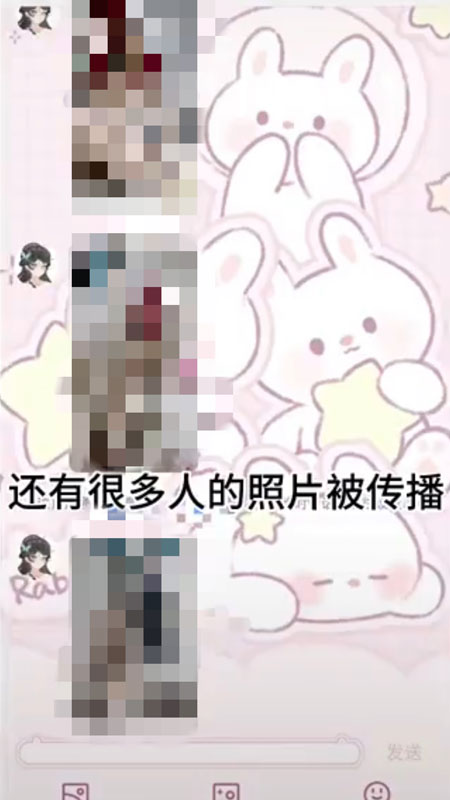

Many victims had their photos doctored by criminals. Photo courtesy of interviewees

On June 27, the Central Network Information Office issued the Notice on Launching the Special Action of "Qinglang 2023 Summer Minors’ Network Environment Renovation", starting a two-month campaign (hereinafter the "Special Action"). The notice specifically calls for attention to the risks of new technologies and applications, such as the use of "AI face changing" and similar technologies to generate vulgar, pornographic pictures and videos involving minors, and for focused rectification of these problems.

— — — — — — — — — —

Today, "AI undressing" technology has spawned a black-market industry chain. Upstream, criminals use AI to generate pictures, compile them into image sets, and sell them in bundles to downstream buyers. Some have even set up "paid membership groups" for this purpose, dragging innocent people, including minors, into a whirlpool of sexual rumors. Reporters from China Youth Daily and China Youth Network investigated.

"AI undressing" pictures sold openly

"That really is my face in the picture, but why am I... naked?"

In May this year, Li Yi (a pseudonym), a college student, received a picture from a netizen. When she saw it, she was both angry and frightened.

Li Yi is a cosplay blogger who often shares photos of herself dressed as various anime characters on social media. She and other netizens later tracked down Luo Fen (an online handle), who had made the nude photos. Luo Fen admitted that he had fed her photos into an AI (artificial intelligence) program, which could "take off" Li Yi’s clothes in a few minutes. Online, Luo Fen openly peddled the pictures at 5 yuan apiece, claiming that 60 yuan would buy a bundle of "nude photos" of more than 60 bloggers, including photos of minors.

"I have never taken a photo like that!" Furious, Li Yi began collecting evidence of the other party’s illegal use of AI, intending to hold him legally accountable. Posing as a buyer, she added Luo Fen as a contact.

Luo Fen did not solicit business directly with the words "AI undressing" but described the service obliquely as "changing pictures". "Little sister, cosplay ‘picture changing’ here, 5 yuan." When Li Yi pressed him on how he "changed pictures", Luo Fen said vaguely that he used AI technology to generate all kinds of cosplay pictures.

Li Yi found further clues in a group chat that Luo Fen had set up. There, Luo Fen not only continued to offer the "picture changing" service but also posted a large number of suggestive, provocative pictures and sold them openly as image sets, saying that 60 yuan would buy 65 pictures and that "an estimated 300 pictures in total will be released gradually through the group chat".

"Who is this?" Li Yi came across her own "nude photo" and asked Luo Fen about it. "This is Clotho (the character’s name) with no clothes on, and the blogger herself allowed it to be shot this way," Luo Fen replied, adding a cryptic hint: "Don’t mess around, because the more than 60 bloggers in the set did not agree to this."

"He made it sound as if we bloggers had taken those explicit photos ourselves!" Li Yi was furious. She revealed her identity and confronted Luo Fen.

"I’m sorry, sister!" Luo Fen admitted that he had "re-created" Li Yi’s picture. Li Yi replied that this did not fall within the normal scope of "derivative creation" but was an infringement of her personality rights.

Luo Fen argued that he had not made this series of pictures himself; "they were made by others". Failing to grasp how serious the matter was, he tried to bargain with Li Yi: "I only have 1,200 yuan on my card. Can I pay you 650 yuan to settle this privately?"

"It’s not about money. You have broken the law." Li Yi and other bloggers chose to call the police.

Black-market industry chain harms minors

Li Yi’s experience is not an isolated case. AI technology is being abused, and a black-market industry chain is taking shape.

Online, a keyword search easily turns up sellers offering "AI undressing" technology or services. They solicit business on social media under the banner of "AI painting" or "AI picture changing" and steer customers toward adding their contact details. Then, in one-on-one chats or private groups, they produce suggestive pictures to entice netizens to buy.

The victims of "AI undressing" are numerous and include minors. One seller claimed to be "rich in resources" and sent a full price list that sorted the image sets into categories such as "stars", "internet celebrities", "models" and "cartoons". The sample pictures he sent even included high school students in school uniforms. He said that customers who did not find a type they liked could send in photos for "single-picture customization" at two yuan each, and that "any picture on the Internet can be used as material".

Another seller invited customers to join a members-only group, saying that "there are already two or three hundred image sets in the group" and that a 30 yuan joining fee would cover all future updates. When the reporter of China Youth Daily and China Youth Network pressed him on the source of the image sets, he said evasively that they had been downloaded from a website: "I didn’t make them myself".

"In fact, anyone who has mastered the relevant technology can use AI to make these pictures." Lan Tian, a senior algorithm engineer, told the reporter of China Youth Daily and China Youth Network that the "AI undressing" algorithm is known as "DeepNude" and was taken down in the United States over ethical concerns, but it cannot be ruled out that some people train models with other AI algorithms to achieve the same effect.

On one messaging app, an AI bot has attracted many users. Users need no knowledge of complex algorithms; they simply follow its prompts to "change faces" in or "undress" photos. The bot also offers fine-grained settings that adjust a picture’s parameters much as a beauty-camera app does.

The bot charges for its use. After clicking the "recharge" button, the user is directed to a prepaid-card website to pay online. The payments go to individuals rather than to a merchant, however, which makes it difficult to trace who operates the bot. According to its price list, an "undressed" photo can be generated for as little as two yuan.

"The machine is neutral. As long as you ‘feed’ it enough images of human body structure, it can redraw the whole picture by recognizing features such as the face and body, and generate an ‘undressed photo’." Lan Tian said that the machine "only cares about generating the photo" and takes no account of the wishes of the people pictured, or of ethical and legal questions such as whether they are adults.

"From the standpoint of technological instrumentalism, technology is neutral and harmless, but this view ignores the moral dimension that technology ought to have." Xie Ling, an associate professor and master’s supervisor at the School of Criminal Investigation, Southwest University of Political Science and Law, believes that technologies differ from one another. The consequences of abusing some new technologies, such as deepfake technology, are hard to predict, and the ethical and legal issues they raise are more complicated.

Building online safety rules on the principle of "most beneficial to minors"

Yao Zhidou, a lawyer and partner at Beijing Jingshi Law Firm, has handled many cases in which the abuse of new technologies infringed on minors’ rights and interests. In one case, a criminal used "AI face changing" software to swap in the faces of a large number of underage students, collected from online sources, to make fake obscene videos, and then sold the videos for a fee. "This not only infringes on the portrait rights and mental health of the underage students, but is also suspected of constituting the crime of disseminating obscene materials for profit."

"To cope with the risks of new technologies and new applications, we need to build safety rules on the principle of ‘most beneficial to minors’ and from the perspective of ‘children’s rights’." Guo Kaiyuan, an expert in juvenile legal research at the China Youth Research Center, believes that addressing these risks requires a dynamic balance between the special protection of minors and the application of new technologies. The relevant departments should establish rules as soon as possible that protect children, respect privacy and provide special protection, in particular rules for age-based graded management and for the informed consent of guardians.

Xie Ling called for classifying and assessing the application risks of new technologies, establishing institutional safeguards for regulating them, and defining the boundaries of their reasonable use. Where no safeguard against abuse exists, the online application of such high-risk new technologies should be strictly regulated, because "new technology is very likely to lead to new illegal behaviors in the future".

Yao Zhidou said that cybercrime is difficult to police. Evidence of cybercrime is stored mainly on network servers, and the complexity and variety of electronic data make it hard to collect. In addition, offenders are difficult to identify, and some are located abroad, which poses challenges for jurisdiction over such cases.

In fact, in view of the risks brought by new technologies and applications on the Internet, relevant departments have stepped up efforts to rectify them.

One of the seven priorities of the Central Network Information Office’s Special Action is the risk posed by new technologies and applications. This includes using technologies such as "AI face changing", "AI drawing" and "AI one-button undressing" to generate vulgar, pornographic pictures and videos involving minors; using so-called "burn after reading" secret chat software to trick minors into providing personal information and to lure them into illegal activities; and using generative artificial intelligence to produce and publish harmful information involving minors.

"This sends a signal that the state is stepping up efforts to clean up the online environment and to strengthen online protection for minors in earnest." Xie Ling pointed out that the Special Action takes account of minors’ physical and psychological vulnerability and aims to prevent harmful internet use by minors and to keep them from becoming "tools" of new forms of online crime. It is also a concrete implementation of the protective measures in the Law on the Protection of Minors and the Law on the Prevention of Juvenile Delinquency.

Li Yi has now emerged from the psychological shadow. She said, "I can’t stop loving life because of these things."

China Youth Daily and China Youth Network trainee reporter Liu Yiheng, reporter Xian Jiejie